Dongwei Jiang

LLM agents, reasoning and self-improvement. Previously focused on speech

Applied Scientist at Amazon. Previously a master's student at JHU

Research Interest

I am currently working on reinforcement learning and agents, particularly the intersection of these two areas — using RL to train agentic models. Tool integration and the ability to interact with the environment have fundamentally changed what AI systems can accomplish, and RL has emerged as the dominant approach for enhancing these capabilities.

I’m also broadly interested in reasoning, where I’ve worked on:

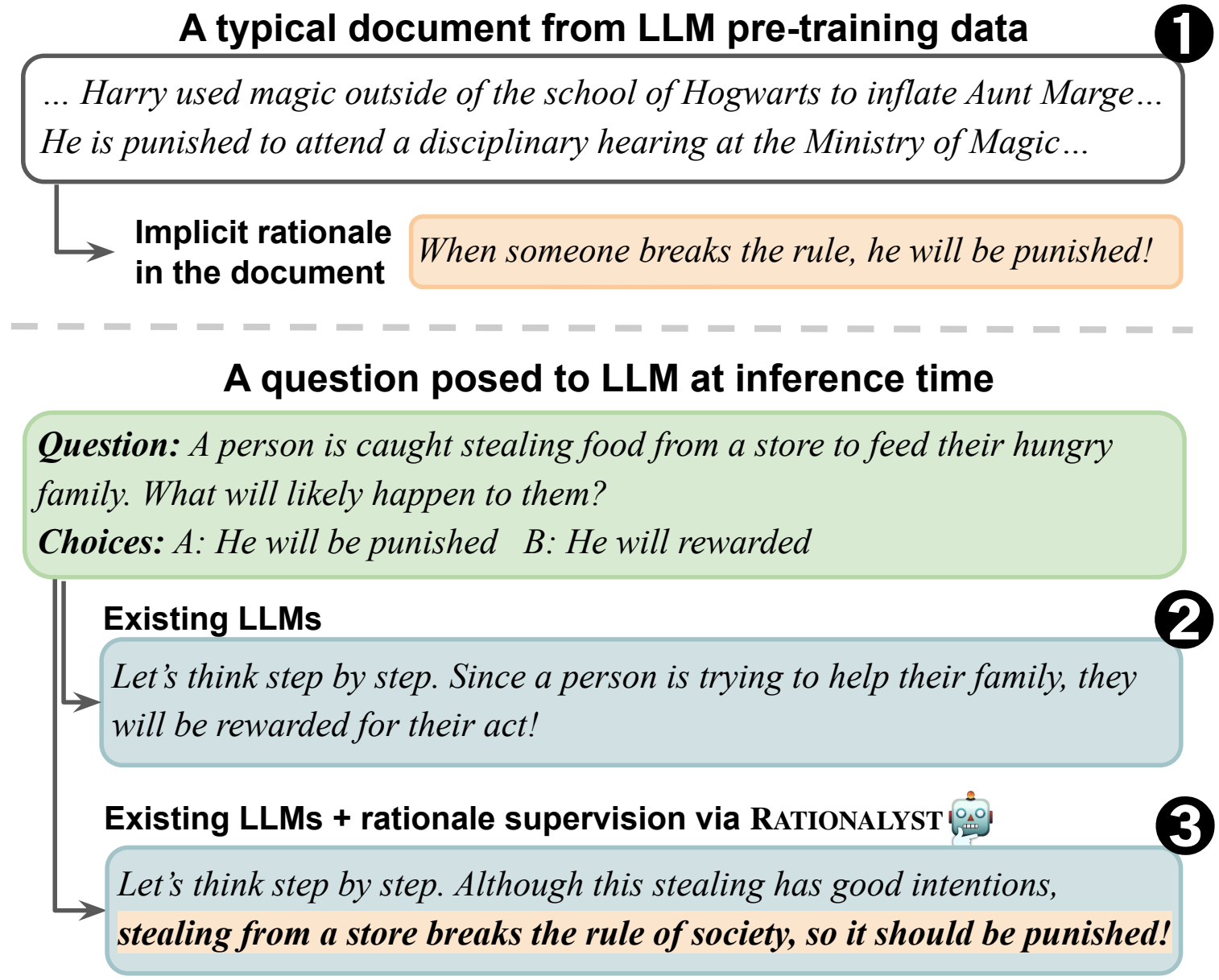

- Building a general-purpose verifier through rationale extraction from unlabeled data to provide process supervision during reasoning [1] (mentioned in Lilian Weng's blog)

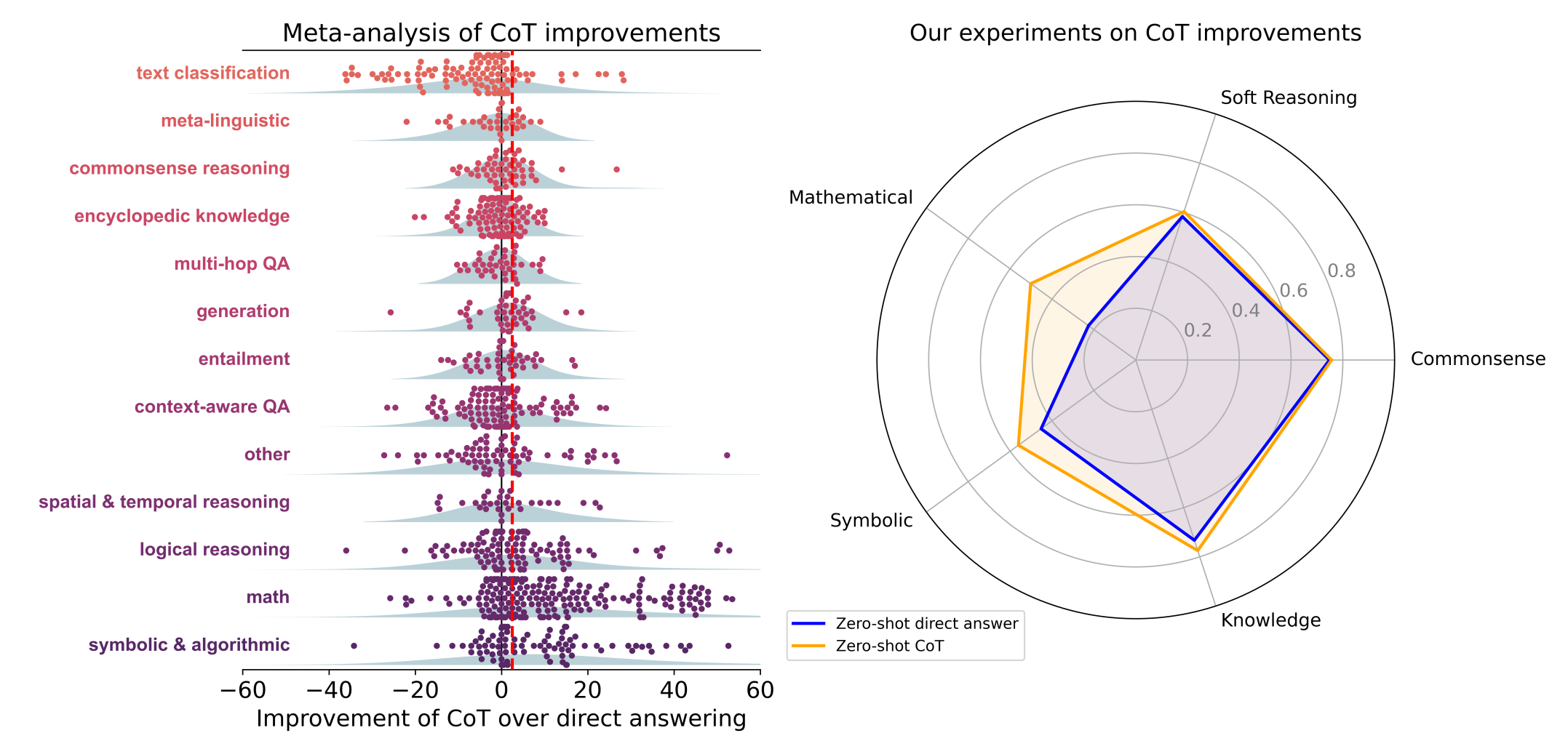

- Investigating the effectiveness of CoT prompting across 100+ papers and 20 datasets, and finding that CoT mainly benefits math and symbolic reasoning tasks [2] (discussion with Jason Wei)

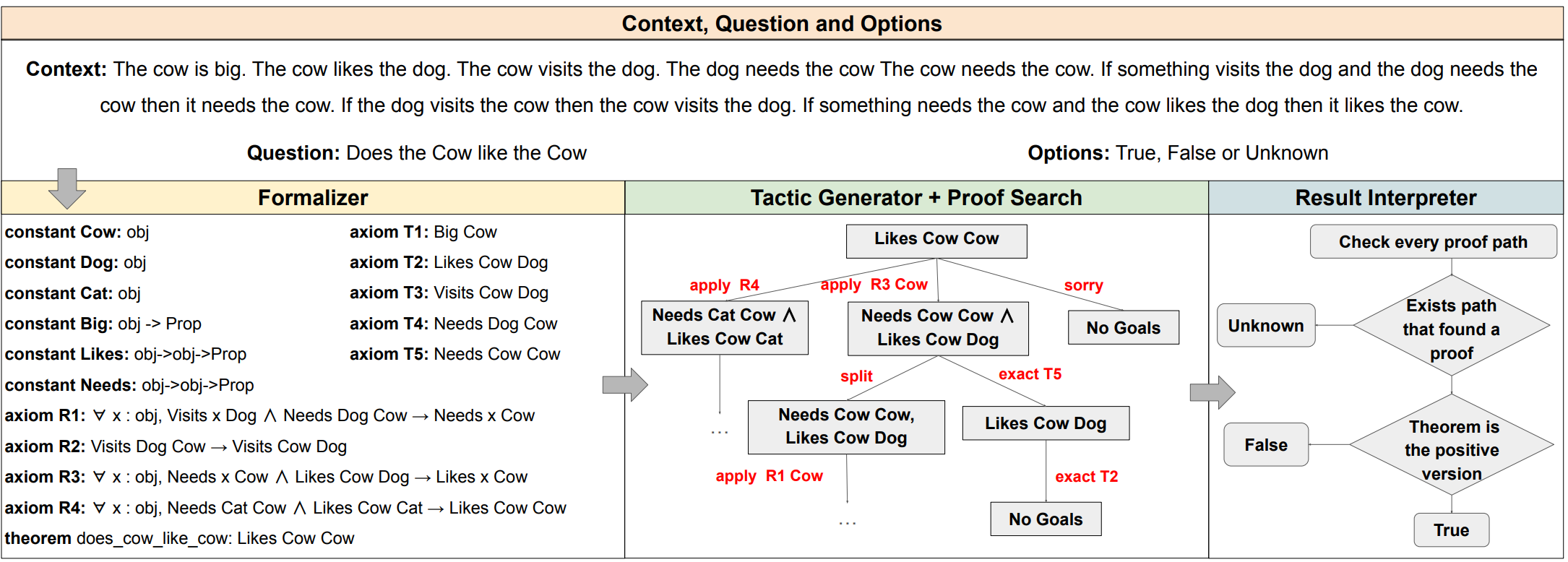

- Theorem proving and logical reasoning that uses the Lean theorem prover to help with the reasoning process [3]

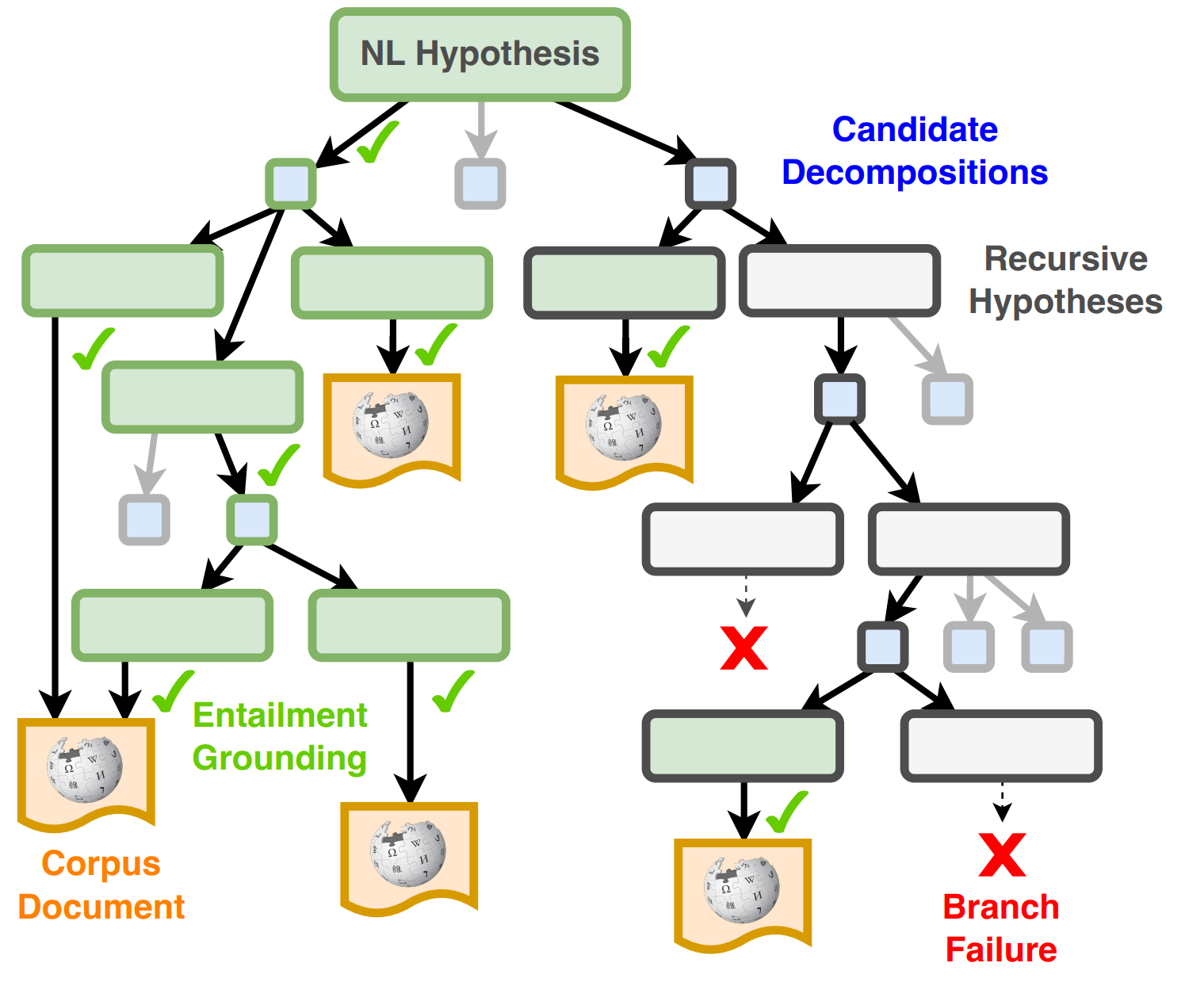

- Decompositional entailment: formulating a consistent and theoretically grounded approach to annotating decompositional entailment datasets [4]

Another area I’m interested in is the self-improvement capability of LLMs (and LLM agents). If we begin with the “end” (superintelligence/AGI) in mind, relying on human input won’t get us there. We need to teach models to interact with the environment and self-improve. Within this area, I’ve worked on:

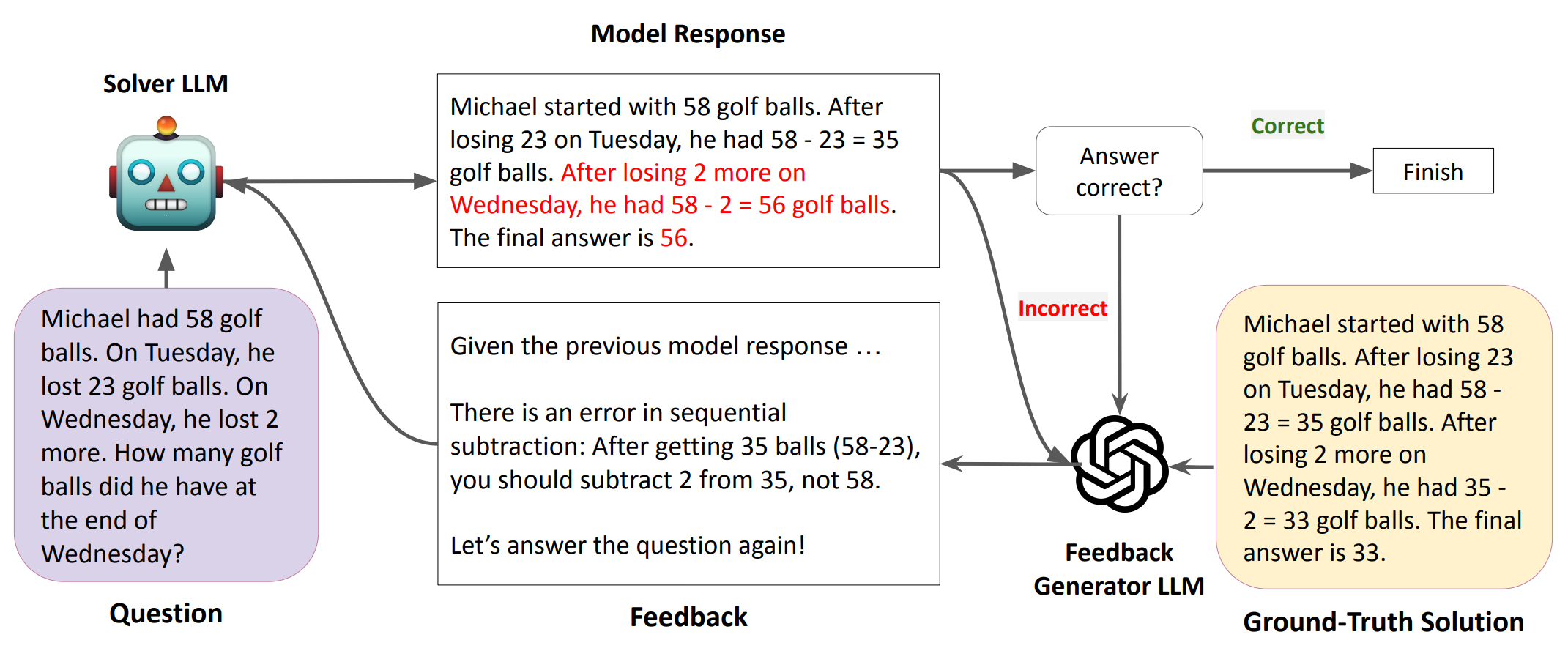

- Understanding what prevents LLMs from self-improving effectively [5]

- Probing the limits of self-improvement even with high-quality feedback [6]

More About Me

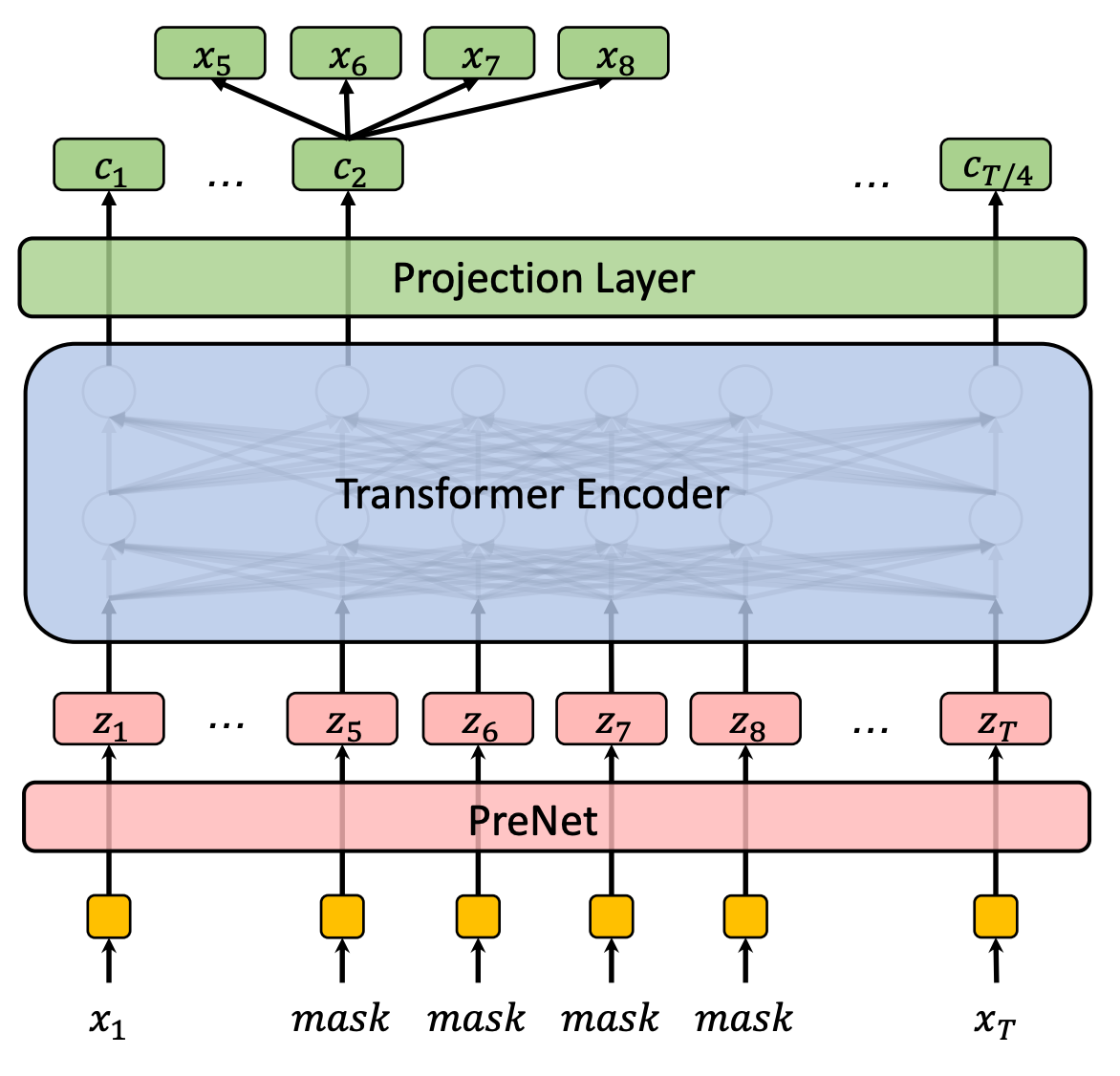

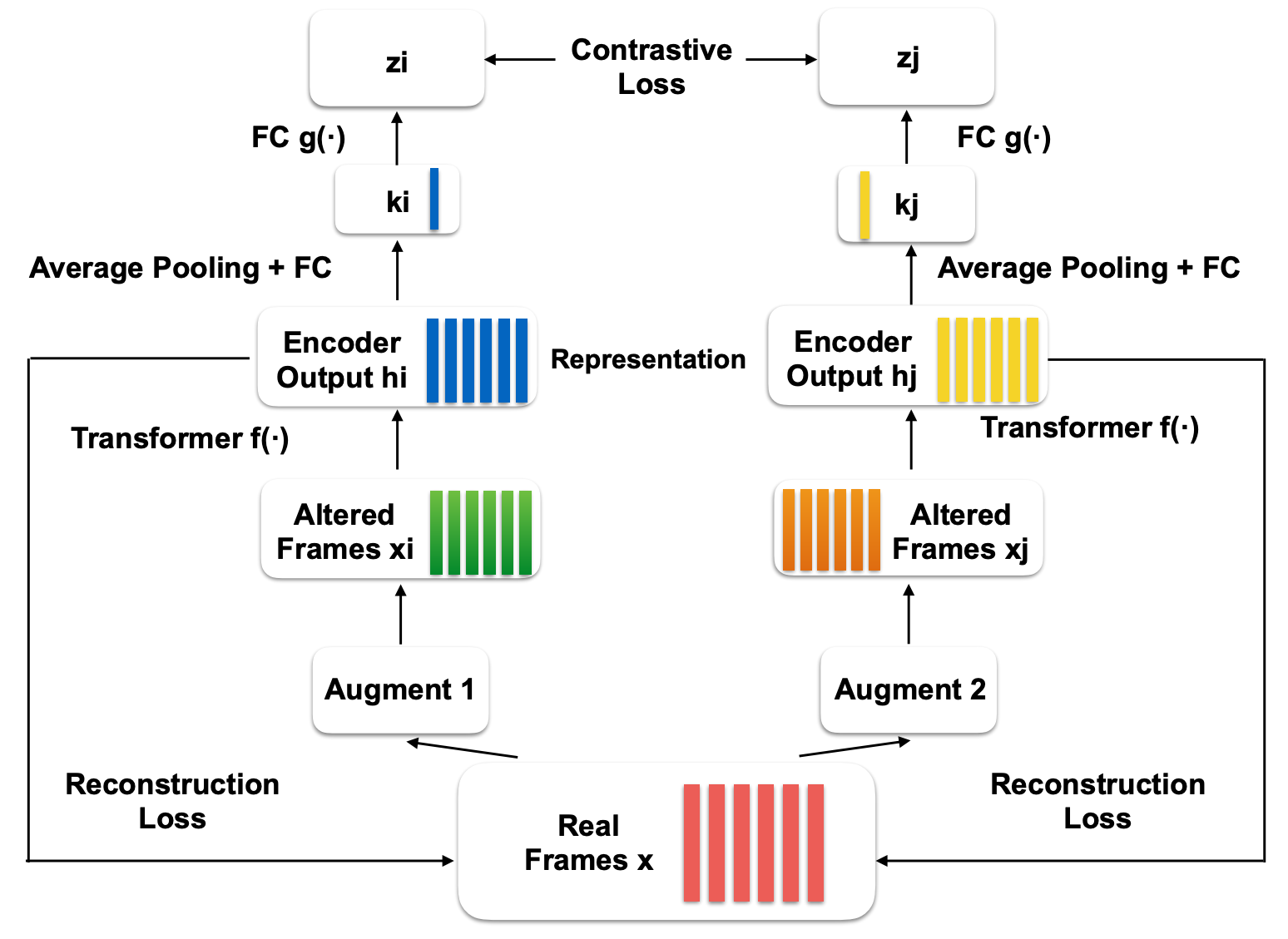

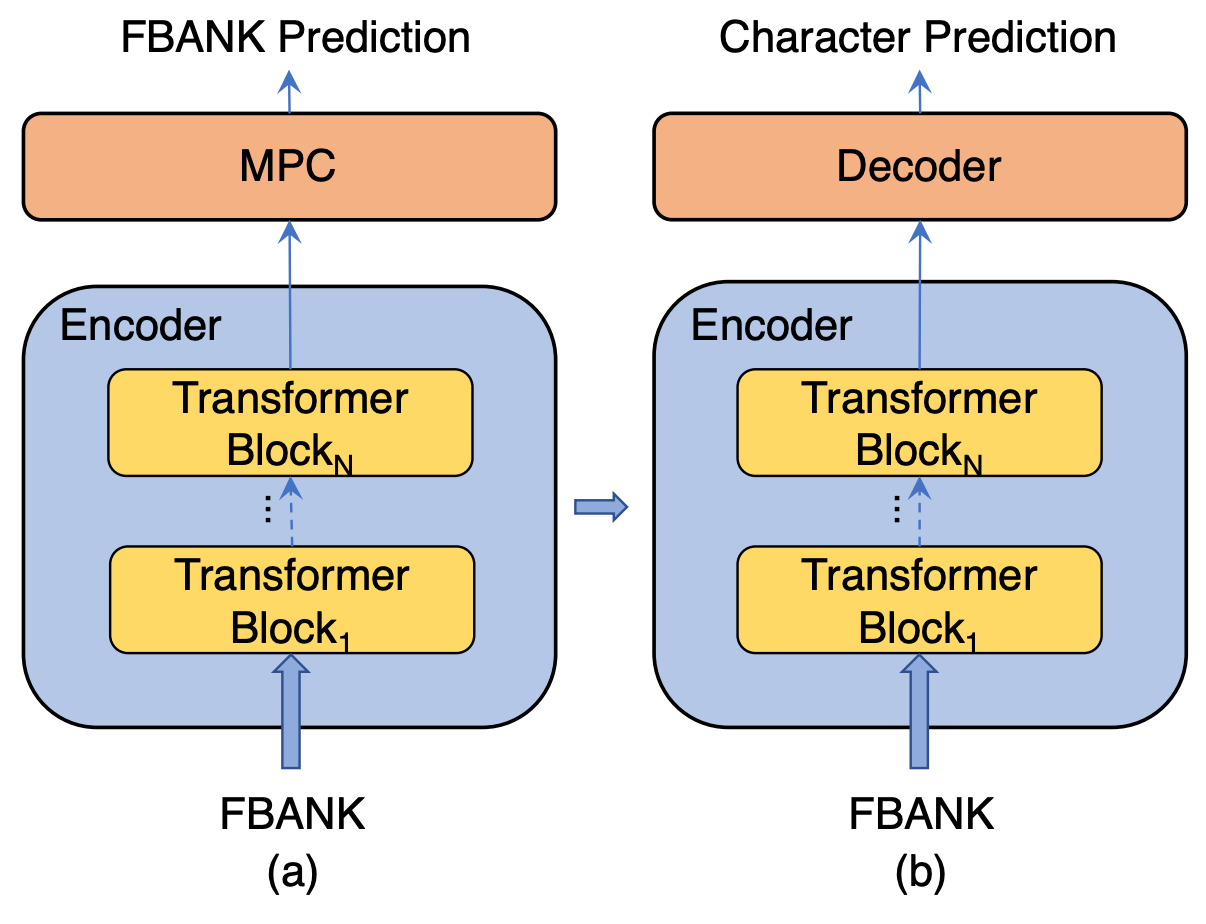

Prior to my current role, I spent six years in industry working on speech processing, where I pioneered self-supervised learning approaches for speech (such as masked predictive coding [7] and Speech SimCLR [8]) and was among the first to deploy end-to-end ASR systems at production scale. Following the release of ChatGPT, I became deeply interested in foundation models and their potential, which motivated me to return to academia and complete my master’s degree at JHU. There, I worked with Professors Daniel Khashabi and Benjamin Van Durme, and also collaborated with Professor Shay Cohen from Edinburgh and Greg Durrett from NYU on various research projects. Currently, I’m working as an Applied Scientist at Amazon, where I continue to pursue research in foundation models and related areas.

In my free time, I sometimes play Civ 6 or Hearthstone. I also rotate between tennis, badminton, swimming, and bouldering every day—well, more like every three or four days, but who’s counting? Research shows racquet sports can reduce mortality risk by 47% and swimming by 28%—so between all these activities, I’m either achieving immortality or just really bad at math :)

I’ve noticed there’s something puzzle-like about all these activities—whether it’s planning civilizations, crafting the perfect deck, or figuring out a tricky climbing route—which probably explains why I enjoy them alongside my research work.

Selected Publications

- LeanReasoner: Boosting Complex Logical Reasoning with Lean. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), NAACL 2024, Mexico City, Mexico, June 16-21, 2024, Jul 2024

![self-[in]correct.png](/assets/img/publication_preview/self-%5Bin%5Dcorrect.png)